Glossary:
- Resource owner = the process that owns mutable state for a domain object.
- Request owner = the process coordinating one user request end-to-end within a domain.
- Adapter = the boundary process that talks to an external system.
- Orchestration = the domain that coordinates multi-step work across other domains.
- PSP = payment service provider (card processor); the PSP adapter isolates that boundary.

Flow primer: four ways things move

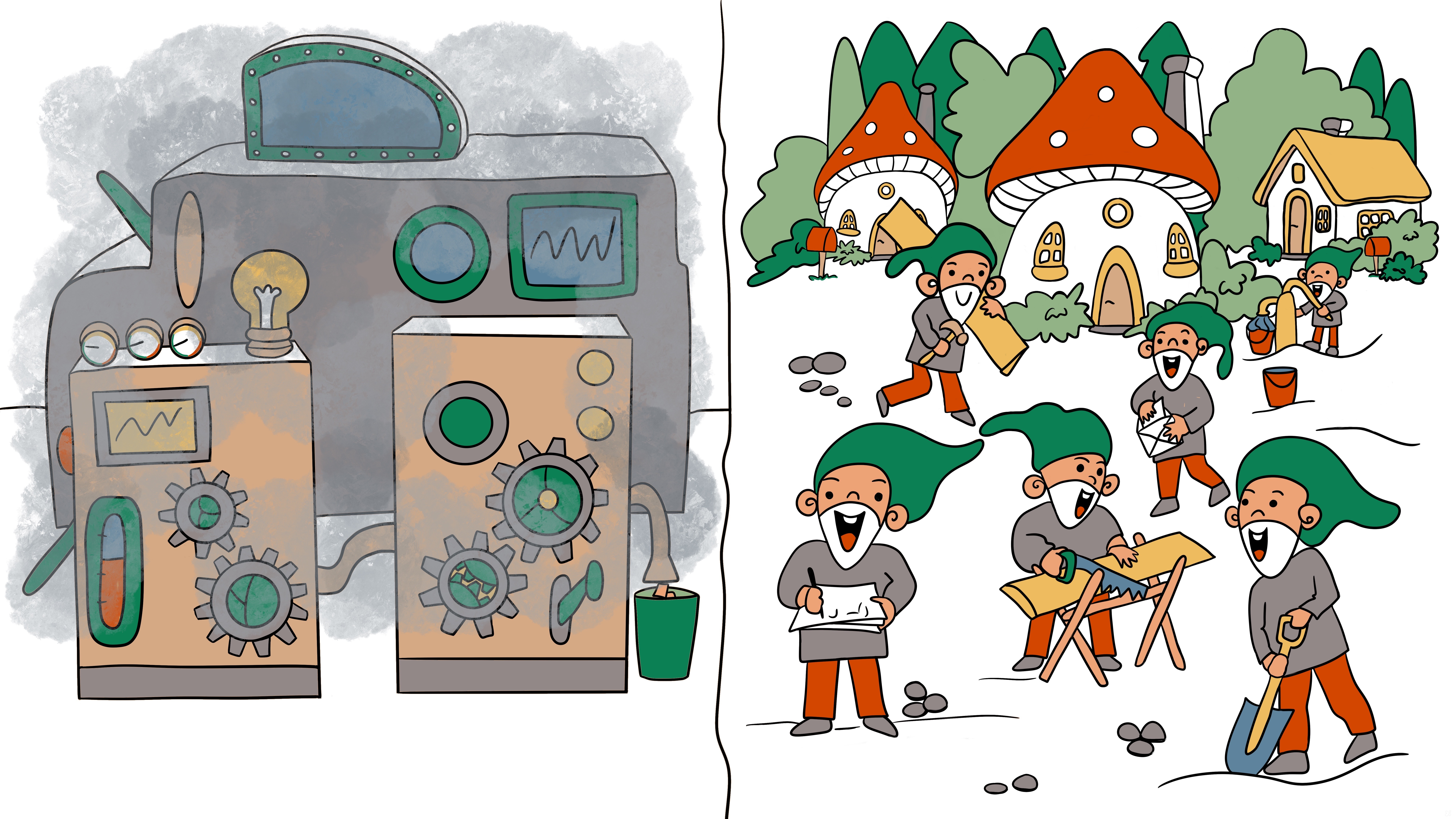

When you think in processes, a system splits into domains that own resources and flows that move work. A flow tells you how facts, intents, and control cross a domain boundary. Four flows cover day-to-day design: data flow, message flow, process flow, and call flow.

The same topology looks different depending on which flow you inspect. Call flow covers the synchronous reply path, message flow covers the async fan-out, data flow tracks typed payloads, and process flow explains who runs each step.

Data flow

Data flow answers “what moves.”

It describes the schema and lineage of facts as they travel through the system. Define which domain publishes which fact, how versions evolve, where you materialize views, and how you replay history if something breaks. At the boundary, the producing domain publishes only the data it owns; the consuming domain derives its own model instead of poking at another domain’s private state.
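A minimal sketch of the consuming side, assuming the producer publishes facts as Erlang maps tagged with type and schema_version. The module order_view, the event names, and the v1 to v2 change (v2 added a currency field, defaulted here to an illustrative value) are hypothetical. The consumer upcasts old versions, folds facts into a view it owns, and can rebuild that view by replaying history.

```erlang
-module(order_view).
-export([upcast/1, apply_event/2, rebuild/1]).

%% Hypothetical upcaster: v1 order_placed events lacked a currency, so
%% normalize them to the v2 shape. The <<"EUR">> default is illustrative.
upcast(#{schema_version := 1, type := order_placed} = E) ->
    E#{schema_version => 2, currency => <<"EUR">>};
upcast(E) ->
    E.

%% Fold one published fact into this domain's own view
%% (a map of OrderId => summary). No foreign state is read.
apply_event(#{type := order_placed, order_id := Id, amount := Amt,
              currency := Cur}, View) ->
    View#{Id => #{status => placed, amount => Amt, currency => Cur}};
apply_event(#{type := order_paid, order_id := Id}, View) ->
    case View of
        #{Id := Order} -> View#{Id := Order#{status := paid}};
        _ -> View                      % unknown order: ignore (or park it)
    end;
apply_event(_Other, View) ->
    View.                              % facts this consumer does not use

%% Replay: rebuild the whole view from the event history.
rebuild(Events) ->
    lists:foldl(fun(E, V) -> apply_event(upcast(E), V) end, #{}, Events).
```

Because rebuild/1 is a pure fold, replaying history after a bug fix runs the same code path as normal consumption.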

Message flow

Message flow answers “who talks to whom and with what guarantees.”

It defines the protocols between resource-owning gnomes. Decide whether a payload is a command or an event, whether ordering matters, how to correlate, how to retry, and what to do with dead letters.

Message flow is the civility layer: domains ask each other for help through documented messages and accept the delivery guarantees the protocol provides.
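One way to make the retry and dead-letter decisions explicit is a small delivery function. This is a sketch under assumptions: the handler is any fun returning ok or {error, Reason}, the attempt limit is a parameter, and waiting between attempts is left to the sender's pacing policy. The dead-letter entry keeps the whole original message, so its correlation IDs survive for replay.

```erlang
-module(delivery).
-export([deliver/3]).

%% Deliver Msg to Handler, trying at most MaxAttempts times in total.
%% Returns {ok, AttemptsUsed} or {dead_letter, Entry}, where Entry keeps
%% the original message (and so its correlation IDs) plus the last reason.
deliver(Msg, Handler, MaxAttempts) when MaxAttempts >= 1 ->
    deliver(Msg, Handler, 1, MaxAttempts).

deliver(Msg, Handler, Attempt, Max) ->
    case Handler(Msg) of
        ok ->
            {ok, Attempt};
        {error, _Reason} when Attempt < Max ->
            %% A real consumer would also back off before retrying.
            deliver(Msg, Handler, Attempt + 1, Max);
        {error, Reason} ->
            {dead_letter, #{msg => Msg, reason => Reason, attempts => Attempt}}
    end.
```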

Backpressure contract

Backpressure is a protocol, not an implementation detail. The contract lives in message flow and defines how senders must slow down.

Outcomes for enqueue across a boundary:

- ok: accepted now.
- {busy, RetryMs}: not accepted; the sender should back off for about RetryMs.
- {queued, Pos}: accepted into a queue; the sender may pace itself based on Pos.

Async variant: cast carries {ReplyTo, Ref}; the server replies with {ack, Ref} or {busy, Ref, RetryMs}.
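A minimal gen_server sketch of the async variant. The module name async_enqueue, the queue bound passed to start_link/1, and the fixed 50 ms retry hint are assumptions, and draining the queue is omitted. The caller matches replies on Ref, so several in-flight enqueues never get confused.

```erlang
-module(async_enqueue).
-behaviour(gen_server).
-export([start_link/1, enqueue/3]).
-export([init/1, handle_call/3, handle_cast/2]).

-define(RETRY_MS, 50).   % example back-off hint sent with busy replies

start_link(Max) ->
    gen_server:start_link(?MODULE, Max, []).

%% Caller side: send the cast and return Ref so replies can be matched.
enqueue(Server, Msg, ReplyTo) ->
    Ref = make_ref(),
    gen_server:cast(Server, {enqueue, Msg, ReplyTo, Ref}),
    Ref.

init(Max) ->
    {ok, #{max => Max, q => queue:new()}}.

%% Server side: reply {ack, Ref} or {busy, Ref, RetryMs} to ReplyTo.
handle_cast({enqueue, Msg, ReplyTo, Ref}, S = #{max := Max, q := Q}) ->
    case queue:len(Q) >= Max of
        true ->
            ReplyTo ! {busy, Ref, ?RETRY_MS},
            {noreply, S};
        false ->
            ReplyTo ! {ack, Ref},
            {noreply, S#{q := queue:in(Msg, Q)}}
    end.

handle_call(_Req, _From, S) ->
    {reply, {error, unsupported}, S}.
```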

Idempotency: all retries carry the same idem_key. Receivers must dedup.
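Receivers need somewhere to remember keys they have already accepted. A sketch, assuming an in-memory window of recent idem_keys per receiver; the module dedup and its oldest-first eviction are illustrative. Size the window to cover the sender's full retry budget, or use durable storage if duplicates can arrive after a restart.

```erlang
-module(dedup).
-export([new/1, check/2]).

%% Bounded window of recently seen idem_keys (capacity is an assumption).
new(Capacity) ->
    #{seen => #{}, order => queue:new(), cap => Capacity}.

%% {new, State1} the first time a key arrives,
%% {duplicate, State} for any retry carrying the same key.
check(Key, S = #{seen := Seen, order := Order, cap := Cap}) ->
    case maps:is_key(Key, Seen) of
        true ->
            {duplicate, S};
        false ->
            S1 = S#{seen := Seen#{Key => true}, order := queue:in(Key, Order)},
            {new, evict(S1, Cap)}
    end.

%% Forget the oldest keys once the window exceeds its capacity.
evict(S = #{seen := Seen, order := Order}, Cap) ->
    case queue:len(Order) > Cap of
        true ->
            {{value, Old}, Order1} = queue:out(Order),
            evict(S#{seen := maps:remove(Old, Seen), order := Order1}, Cap);
        false ->
            S
    end.
```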

Sender behavior: on {busy, RetryMs}, sleep for about RetryMs with jitter; on timeouts, switch to exponential backoff; abandon once the retry budget is spent.
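The sender policy can be written as a pure function that returns how long to wait, leaving the actual timer:sleep/1 to the caller. The base delay, cap, 20% jitter on busy hints, and "full jitter" on timeouts are assumptions chosen for the sketch.

```erlang
-module(backoff).
-export([delay/3]).

-define(BASE_MS, 100).   % first timeout back-off (assumption)
-define(CAP_MS, 5000).   % ceiling for exponential growth (assumption)

%% Outcome:  {busy, RetryMs} | timeout  (the last reply seen)
%% Attempt:  retries so far, starting at 1
%% BudgetMs: time the sender may still spend retrying
%% Returns {wait, Ms} or give_up when the budget cannot cover the wait.
delay(Outcome, Attempt, BudgetMs) ->
    Ms = case Outcome of
             {busy, RetryMs} ->
                 %% Honour the callee's hint, plus up to 20% jitter.
                 jitter(RetryMs, RetryMs div 5);
             timeout ->
                 %% Exponential backoff with full jitter, capped.
                 Exp = min(?CAP_MS, ?BASE_MS bsl (Attempt - 1)),
                 rand:uniform(Exp + 1) - 1
         end,
    case Ms =< BudgetMs of
        true -> {wait, Ms};
        false -> give_up
    end.

%% Base plus a uniform random extra in 0..Spread.
jitter(Base, 0) -> Base;
jitter(Base, Spread) -> Base + rand:uniform(Spread + 1) - 1.
```

A sender loop would call delay/3 after each failed attempt, sleep for the returned Ms, subtract it from the budget, and stop on give_up.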

The callee decides ok, busy, or queued based on its process-flow limits (bounded queues, concurrency caps).
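A sketch of that callee-side decision, mapping two process-flow limits (in-flight work against a concurrency cap, and queue length against a queue bound) onto the three outcomes. The map keys and the per-item drain estimate used for RetryMs are hypothetical.

```erlang
-module(admission).
-export([decide/3]).

%%   in_flight < cap        -> ok            (a worker slot is free now)
%%   QueueLen  < queue_max  -> {queued, Pos} (accepted, waits in line)
%%   otherwise              -> {busy, RetryMs} (refused; sender backs off)
decide(#{in_flight := InFlight, cap := Cap}, _QueueLen, _Opts)
  when InFlight < Cap ->
    ok;
decide(_Load, QueueLen, #{queue_max := QMax}) when QueueLen < QMax ->
    {queued, QueueLen + 1};
decide(_Load, QueueLen, #{queue_max := QMax, ms_per_item := MsPerItem}) ->
    %% Rough hint: time for the overflow to drain back under the bound.
    {busy, max(1, (QueueLen - QMax + 1) * MsPerItem)}.
```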

Synchronous patterns (caller waits)

- call with an ok | {busy, RetryMs} reply contract for explicit backpressure.

- Bounded concurrency (semaphore) around the callee; caller blocks until a slot frees.

- Hedged calls with budget (fail fast if downstream is slow; no retries beyond budget).

- Token-bucket at the caller (rate-limit before sending).

- Inline validation+reject (cheap checks sync; expensive work deferred async).
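The token-bucket pattern from the list can be sketched as a pure function over a bucket map. Assumptions: time is passed in as milliseconds so the logic is testable (a real caller would use erlang:monotonic_time(millisecond)), and tokens refill continuously at RatePerSec up to Capacity. On {wait, Ms, _} the caller holds the request instead of sending it.

```erlang
-module(token_bucket).
-export([new/2, take/2]).

new(Capacity, RatePerSec) when Capacity > 0, RatePerSec > 0 ->
    #{cap => Capacity, rate => RatePerSec, tokens => Capacity,
      last => undefined}.

%% take(Bucket, NowMs) -> {ok, Bucket1} | {wait, Ms, Bucket1}
take(B = #{last := undefined}, Now) ->
    take(B#{last := Now}, Now);
take(B = #{cap := Cap, rate := Rate, tokens := Tokens, last := Last}, Now) ->
    %% Refill for the elapsed time, never above capacity.
    Refilled = min(Cap, Tokens + (Now - Last) * Rate / 1000),
    B1 = B#{tokens := Refilled, last := Now},
    case Refilled >= 1 of
        true ->
            {ok, B1#{tokens := Refilled - 1}};
        false ->
            %% Milliseconds until one whole token is available.
            {wait, ceil((1 - Refilled) * 1000 / Rate), B1}
    end.
```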

Asynchronous patterns (caller doesn’t wait)

- Job ID + completion event (done|failed later to a notifier).

- Credit/pull-based demand (consumer grants credits; producer sends only with credit).

- Outbox submit + occasional status query (running|done|failed) outside the hot path.

- Monitor the worker (react to DOWN or completion messages; no send-time blocking).

- Pressure subscription (publish high|mid|low; senders pace heuristically).

- TTL/deadline in payload (receiver drops/defers if expired; sender uses compensations).

- Queue TTL + DLQ (expired work is rerouted; upstream metrics trigger pacing).
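Credit-based demand reduces to a counter the producer must never overdraw. A sketch, assuming the consumer's grants arrive as plain integers and that SendFun (hypothetical) performs the actual delivery; items beyond the current credit stay in the producer's buffer.

```erlang
-module(credit).
-export([grant/2, send/3]).

%% Consumer granted N more credits.
grant(Credits, N) when is_integer(N), N > 0 ->
    Credits + N.

%% Send buffered items while credit remains.
%% send(Buffer, Credits, SendFun) -> {RemainingBuffer, RemainingCredits}
send(Buffer, 0, _SendFun) ->
    {Buffer, 0};                       % out of credit: keep the rest
send([], Credits, _SendFun) ->
    {[], Credits};                     % nothing left to send
send([Item | Rest], Credits, SendFun) ->
    SendFun(Item),
    send(Rest, Credits - 1, SendFun).
```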

Tiny sync call template (example shape only; the contract is defined above):

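```erlang
%% Sync protocol (inside a gen_server callback module)
-define(MAX, 1000).                  % queue bound; example value
-record(state, {q = queue:new()}).

handle_call({enqueue, Msg, Idem}, _From, S = #state{q = Q}) ->
    case queue:len(Q) >= ?MAX of
        %% Full: refuse and ask the caller to back off for ~50 ms.
        true -> {reply, {busy, 50}, S};
        %% Room left: accept. Idem is kept with the message so the
        %% receiver can dedup retries (the dedup check is omitted here).
        false -> {reply, ok, S#state{q = queue:in({Idem, Msg}, Q)}}
    end.
```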

Process flow

Process flow answers “who does the work and how it survives failure.”

It is the topology and lifecycle of the workers: where they live, who supervises them, how they restart, and how you cap concurrency.

Pick the supervisor strategy that matches dependency: one_for_one for isolated workers, rest_for_one when downstream children depend on an upstream owner, and one_for_all when the group must rise and fall together. Use a dynamic supervisor (simple_one_for_one in Erlang, DynamicSupervisor in Elixir) when children are created at runtime.

Set each child’s restart type: permanent (always restarted), transient (restarted only after an abnormal exit), or temporary (never restarted). Combine it with a restart budget per subtree (intensity/period in Erlang’s supervisor flags, max_restarts/max_seconds in Elixir) to cap the blast radius. OTP supervisors restart children immediately, so put backoff with jitter in the child’s startup path to avoid herd effects. Choose shutdown timeouts per role: short for stateless workers, longer for stateful owners that must flush.
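A sketch of how those choices look in an Erlang supervisor callback. The child modules (order_owner, order_workers_sup, reconciler) are hypothetical, and the restart budget and shutdown values are example numbers, not recommendations.

```erlang
-module(order_sup).
-behaviour(supervisor).
-export([start_link/0, init/1]).

start_link() ->
    supervisor:start_link({local, ?MODULE}, ?MODULE, []).

init([]) ->
    %% Restart budget: more than 5 restarts within 30 s makes this
    %% supervisor give up and escalate to its own parent.
    SupFlags = #{strategy => rest_for_one, intensity => 5, period => 30},
    Children =
        [%% Stateful owner: always restarted, long shutdown to flush state.
         #{id => order_owner,
           start => {order_owner, start_link, []},
           restart => permanent, shutdown => 10000, type => worker},
         %% Depends on the owner: rest_for_one restarts it (and every
         %% child after it) whenever the owner restarts.
         #{id => order_workers_sup,
           start => {order_workers_sup, start_link, []},
           restart => permanent, shutdown => infinity, type => supervisor},
         %% Restarted only after an abnormal exit; short shutdown.
         #{id => reconciler,
           start => {reconciler, start_link, []},
           restart => transient, shutdown => 2000, type => worker}],
    {ok, {SupFlags, Children}}.
```

Child order matters under rest_for_one: listing the owner first is what makes its dependents restart with it.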

When we discuss process types in a future post, we will also cover supervision tree structures.

Call flow

Call flow answers “how control and data move inside code.”

Scope it to a single domain: from the entry point to the exit value, including timing, cancellation, and error policy. At a domain boundary, switch to message flow (that’s where backpressure and delivery semantics live); don’t stretch a synchronous chain across domains.

Design the path first, then the code:

Keep the hot path short and explicit. Do validation and cheap lookups inline; push slow or blocking work to workers. Set an end-to-end budget and per-hop timeouts that fit inside it. Propagate context (trace_id, tenant, deadline) through every call so lower layers can fail fast instead of queuing doomed work. Make retries deliberate and only for idempotent steps; prefer fallbacks or compensation over deep retry ladders. Avoid re-entrant call cycles and synchronous “ping-pong” between processes: when process A calls B while B is calling A, both block until a timeout fires, which behaves like an invisible lock.
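A sketch of deadline propagation, assuming the context carries an absolute deadline in Erlang monotonic milliseconds. Monotonic time is only comparable on one node, so a message that crosses nodes should carry the remaining milliseconds instead. The module name budget and the per-hop cap are illustrative.

```erlang
-module(budget).
-export([new_deadline/1, hop_timeout/2, hop_timeout/3]).

%% Request owner: turn the end-to-end budget into an absolute deadline.
new_deadline(BudgetMs) ->
    erlang:monotonic_time(millisecond) + BudgetMs.

hop_timeout(Deadline, HopCapMs) ->
    hop_timeout(Deadline, HopCapMs, erlang:monotonic_time(millisecond)).

%% Each hop: timeout = what is left, capped per hop. Fail fast once the
%% deadline has passed instead of queueing work nobody is waiting for.
hop_timeout(Deadline, HopCapMs, NowMs) ->
    case Deadline - NowMs of
        Left when Left =< 0 -> {error, deadline_exceeded};
        Left -> {ok, min(Left, HopCapMs)}
    end.
```

A hop would then pass the returned T to gen_server:call(Pid, Req, T), or skip the call entirely on deadline_exceeded.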

Three common shapes cover most cases. Straight-through: a request owner runs a small stack of pure transforms and one side-effect at the edge. Short async detour: issue a local task and rejoin before the budget ends (still inside the domain). Cross-domain work: cut the call, emit a message, and return; any further coordination belongs to message flow.
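The short async detour can be sketched with spawn_monitor/1 and a selective receive. Assumptions: the task is a zero-arity fun, and a late task is killed rather than left running. The monitor also reports a crashing task, so the request owner never waits on a dead process.

```erlang
-module(detour).
-export([run/2]).

%% Start a local task, then rejoin within TimeoutMs.
%% Returns {ok, Result} | {error, timeout} | {error, {crashed, Reason}}.
run(Fun, TimeoutMs) ->
    Parent = self(),
    Tag = make_ref(),
    {Pid, MRef} = spawn_monitor(fun() -> Parent ! {Tag, Fun()} end),
    %% ...the owner could do other inline work here before rejoining...
    receive
        {Tag, Result} ->
            erlang:demonitor(MRef, [flush]),
            {ok, Result};
        {'DOWN', MRef, process, Pid, Reason} ->
            {error, {crashed, Reason}}
    after TimeoutMs ->
        exit(Pid, kill),
        erlang:demonitor(MRef, [flush]),
        %% Drop a result that may have raced in just before the kill.
        receive {Tag, _} -> ok after 0 -> ok end,
        {error, timeout}
    end.
```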

A quick checklist when you cut a path: define the return value and error space; set an end-to-end deadline and per-hop timeouts; decide where retries are allowed (and prove idempotency); push blocking work off the scheduler; propagate trace_id and deadline; and terminate the call at the boundary.

Instrumenting the flows

Put the four flows around the same boundary and they reinforce each other. A call enters a domain, triggers messages to other domains, moves data through transformations, and runs on supervised processes. Each flow covers a different design question, but they all point to the same contract.

Every cross-domain message carries the same metadata so tracing, retries, and upgrades stay predictable:

```erlang
-record(msg_meta, {
    trace_id       :: binary(),
    span_id        :: binary(),
    causation_id   :: binary(),
    schema_version :: integer(),
    idem_key       :: binary(),   % for deduplication
    sent_at        :: integer()   % unix ms
}).
```
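Filling in the metadata for a follow-up message is mechanical: keep the trace, open a new span, and point causation_id at the span that caused it. A sketch, assuming the msg_meta record (repeated so the module compiles alone, with causation_id widened to allow undefined on the first message of a trace) and a hypothetical new_id/0 built on erlang:unique_integer/1, which is unique only within one node.

```erlang
-module(msg_meta_sketch).
-export([root/2, child/2]).

-record(msg_meta, {trace_id :: binary(), span_id :: binary(),
                   causation_id :: binary() | undefined,
                   schema_version :: integer(), idem_key :: binary(),
                   sent_at :: integer()}).

%% Hypothetical ID helper; production code would use random 128-bit IDs
%% or the tracing library's own format.
new_id() ->
    integer_to_binary(erlang:unique_integer([positive])).

%% First message of a request: new trace, nothing caused it yet.
root(IdemKey, SchemaVersion) ->
    #msg_meta{trace_id = new_id(), span_id = new_id(),
              causation_id = undefined, schema_version = SchemaVersion,
              idem_key = IdemKey, sent_at = erlang:system_time(millisecond)}.

%% Message caused by Parent: same trace, new span, causation = parent span.
child(#msg_meta{trace_id = Trace, span_id = Cause,
                schema_version = Vsn}, IdemKey) ->
    #msg_meta{trace_id = Trace, span_id = new_id(), causation_id = Cause,
              schema_version = Vsn, idem_key = IdemKey,
              sent_at = erlang:system_time(millisecond)}.
```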

Tooling hooks sit where the flows live:

- Trace IDs and causation IDs follow message flow across domain boundaries.

- Mailbox depth, queue lengths, and throughput expose process-flow pressure and health.

- Supervision trees double as topology diagrams and recovery plans.

- Schema registries, contract tests, and version dashboards keep data flow honest.

- Endpoint timeouts and breaker dashboards make call flow visible and tunable.
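Mailbox depth is available from erlang:process_info/2. A sketch that samples it and maps the number onto the high, mid, and low levels from the pressure-subscription pattern; the thresholds and the module name pressure are assumptions to tune per role.

```erlang
-module(pressure).
-export([mailbox_depth/1, level/3]).

%% Current mailbox depth of a local process.
mailbox_depth(Pid) ->
    case erlang:process_info(Pid, message_queue_len) of
        {message_queue_len, N} -> {ok, N};
        undefined -> {error, not_alive}
    end.

%% Map a depth onto the signal a pressure subscription could publish.
level(Depth, _MidAt, HighAt) when Depth >= HighAt -> high;
level(Depth, MidAt, _HighAt) when Depth >= MidAt -> mid;
level(_Depth, _MidAt, _HighAt) -> low.
```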

Use this primer as a checklist every time you cut a boundary. Define the data contract, message protocol, process topology, and call budget. Once those are in place, the gnomes can work without stepping on each other’s toes.

Highlight the idempotency contact points: the request owner refuses to start the same job twice, the ledger ignores duplicate idem_keys, and the PSP adapter retries safely. Once those guardrails are in place, replays and retries stop being scary.

Anti-patterns and fixes

Data flow: Probing foreign ETS tables couples your domain to another domain’s private storage layout and invites stale or inconsistent reads. Publish the facts your domain owns, let other domains subscribe, and derive local views from the feed.

Message flow: Fire-and-forget without correlation means you never know which request triggered which side effect. Always include correlation/causation IDs and route failures to a dead-letter queue so you can replay with context.

Process flow: Unbounded mailboxes hide backpressure until the VM falls over. Cap queues, drop or park excess work deliberately, and surface the metrics so upstream request owners can slow down—the same metrics feed the message-flow backpressure contract.

Call flow: Synchronous chains that cross domain boundaries turn every deployment into a distributed transaction. Cut the boundary: write the outgoing message to an outbox in the same transaction as the state change, publish it from there, and let the receiving domain drive its own timeline.
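A sketch of the outbox idea, using a plain map as a stand-in for the domain's store. In a real system commit/4 would be a single database or Mnesia transaction; the module name, map keys, and publish fun are assumptions. Because a message leaves the outbox only after a successful publish, a crash leads to a re-send, which the receiver's idem_key dedup absorbs.

```erlang
-module(outbox).
-export([commit/4, relay/2]).

%% Record the state change and the outgoing message in ONE update,
%% so neither can exist without the other.
commit(State = #{data := Data, outbox := Out}, Key, Value, Msg) ->
    State#{data := Data#{Key => Value}, outbox := Out ++ [Msg]}.

%% Publish pending messages in order; stop at the first failure and
%% leave the rest for the next relay tick.
relay(State = #{outbox := []}, _Publish) ->
    State;
relay(State = #{outbox := [Msg | Rest]}, Publish) ->
    case Publish(Msg) of
        ok -> relay(State#{outbox := Rest}, Publish);
        {error, _Reason} -> State
    end.
```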

Flow checklist

| Flow type | Design focus |
|---|---|
| Data flow | Owner, schema & version, evolution plan, replay/materialization strategy |
| Message flow | Command vs event, ordering key, retry/backoff, DLQ, idempotency rule |
| Process flow | Supervision strategy, concurrency limits, mailbox bounds, backpressure signal |
| Call flow | Sync vs async, timeout budget per hop, breaker policy, fallback/compensation |

Where to next

If you have not read the setup, start with Gnomes, Domains, and Flows and Domains Own Code and Data. When the flows make sense, jump to Putting It Together for a payments-style walkthrough, then stay tuned for the process-archetype deep dive.
